The Allure of Parallel Processing

Success in science hinges on data, and data generation depends on speed and quality. In turn, speed and quality depend on how you process samples. Manual processing is fraught with time-consuming bottlenecks and carries risks of error and variation.

Automated systems offer precise, accurate, high-throughput processing, but many of these systems are limited to single-protocol processing. This caps sample processing speed and, with it, the pace of discovery. In some cases, labs opt to purchase additional hardware, which takes up precious lab space and consumes capital as well as ongoing maintenance funds.

Parallel processing offers an alternative: multiple, different assays run at once on a single automated system. By doing so, labs can maximize sample processing throughput and rapidly reap insight-generating data without sacrificing quality. This combination of speed and quality empowers today’s savvy research organizations, especially those in drug discovery and genomics, to achieve new heights of success faster than their competitors.

On top of this, parallel processing enables researchers to address diverse experiments, with dissimilar parameters, conditions, and samples, more efficiently than single-protocol processing. Assay replicates and controls can be run in parallel to improve statistical validity and data quality. This minimizes experimental variability and improves reproducibility, especially in applications where accuracy and consistency are critical and easily compromised.

The Importance of Lab Automation Software in Parallel Processing

Devices are not the only factor to consider when seeking to increase speed and quality through parallel processing. In fact, hardware, software, and people comprise a three-pronged approach to automation. Learn more about this approach in our blog, “Lab Automation’s Cogent Trichotomy”.

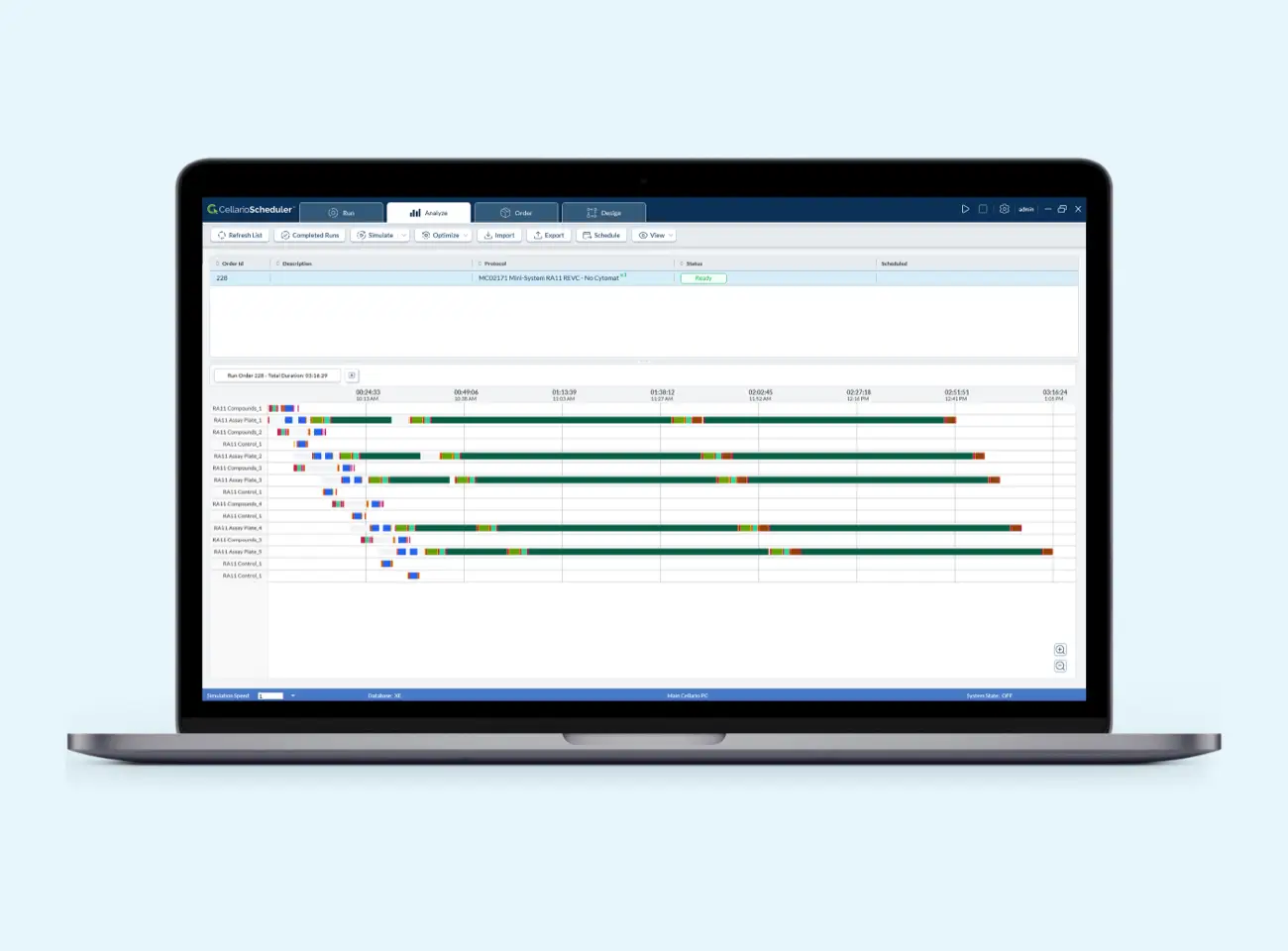

With that said, the limitations of single-protocol processing often stem from the automated system’s scheduling software. In fact, many software packages can schedule a simple, single biochemical assay, but very few can run multiple protocols in parallel. The impact shows up downstream as longer waits for the data that drives each analysis cycle.
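To make the scheduling difference concrete, here is a minimal sketch that compares running two protocols back to back with interleaving them on shared devices. It is an illustration only, not how Cellario or any other scheduler works: the protocol names, device names, durations, and the greedy "earliest start first" rule are all assumptions, and real schedulers must also handle plate transport, time-critical steps, and error recovery.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    device: str   # hypothetical device name
    minutes: int  # how long the step occupies the device


def serial_makespan(protocols):
    """Total time when protocols run one after another."""
    return sum(step.minutes for steps in protocols.values() for step in steps)


def parallel_makespan(protocols):
    """Greedy interleaving: a step starts once the previous step of its
    protocol has finished and the device it needs is free."""
    device_free = {}                          # device -> time it becomes free
    ready = {name: 0 for name in protocols}   # protocol -> earliest next start
    next_idx = {name: 0 for name in protocols}
    remaining = sum(len(steps) for steps in protocols.values())

    while remaining:
        best = None
        for name, steps in protocols.items():
            i = next_idx[name]
            if i == len(steps):
                continue  # this protocol is finished
            step = steps[i]
            start = max(ready[name], device_free.get(step.device, 0))
            if best is None or start < best[0]:
                best = (start, name, step)
        start, name, step = best
        end = start + step.minutes
        device_free[step.device] = end
        ready[name] = end
        next_idx[name] += 1
        remaining -= 1

    return max(ready.values())


# Hypothetical protocols; durations are made up for illustration.
protocols = {
    "elisa": [Step("liquid_handler", 10), Step("incubator", 60), Step("reader", 5)],
    "pcr_prep": [Step("liquid_handler", 15), Step("sealer", 2), Step("thermocycler", 40)],
}

print("serial:", serial_makespan(protocols), "min")
print("parallel:", parallel_makespan(protocols), "min")
```

In this toy case the second protocol runs almost entirely during the first protocol's incubation, so the interleaved schedule finishes in 75 minutes instead of 132.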

The right software, such as Cellario whole lab automation software, can facilitate single and parallel processing as the needs arise. And it can grow and change along with your evolving needs.

What’s more, the automation and scheduling software should support quality data through adherence to the FAIR Principles published by GO FAIR. These principles aim to improve scientific data management and stewardship in the digital age, and they apply in particular to the analytical data, event-based data, and metadata harvested from automated systems. In essence, data should be findable, accessible, interoperable, and reusable.
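As a rough illustration of what a FAIR-minded record might look like, the sketch below bundles run metadata and events into a single JSON document. The `RunRecord` class, its field names, and the sample values are hypothetical and do not reflect any particular product's schema; the FAIR Principles themselves do not prescribe a format.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _now():
    # ISO 8601 timestamps in UTC keep records comparable across systems.
    return datetime.now(timezone.utc).isoformat()


@dataclass
class RunRecord:
    assay: str
    plate_barcode: str
    device: str
    operator: str
    usage_terms: str = "internal-use"  # reusable: state the terms of reuse
    run_id: str = field(default_factory=lambda: str(uuid.uuid4()))  # findable: unique ID
    started_at: str = field(default_factory=_now)
    events: list = field(default_factory=list)  # provenance trail

    def log_event(self, name, **details):
        self.events.append({"event": name, "at": _now(), **details})

    def to_json(self):
        # Interoperable: a plain, open format that other tools can parse.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical values for illustration.
record = RunRecord(assay="ELISA", plate_barcode="PLATE-0001",
                   device="reader-1", operator="jdoe")
record.log_event("read_complete", wavelength_nm=450)
print(record.to_json())
```

Accessibility depends on where such records are stored and how they are retrieved, which this sketch leaves out.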

Evaluating Scheduling and Management Software for Parallel Processing

Parallel processing is necessary to convert your lab into a highly functional data factory. In addition to evaluating hardware, it’s important to conduct due diligence on the system’s scheduling and management software to be sure that it can perform to your current and future expectations. As you define your requirements and evaluate options, here are some factors to consider.

- Features and Functionality – In addition to comparing software features against your scientific and technical requirements, evaluate the software provider’s product development roadmap. This will help you to gauge the provider’s long-term commitment to the software and, by the same token, your ongoing success. Look for customizable templates, scheduling algorithms and ease of parallel processing setup, automated updates, and reporting/analytical tools.

- Scalability and Flexibility – Your science continues to evolve and so should your whole lab automation software. To benefit from maximum scalability over time, your software should scale processing in parallel and work well with simple to highly complex assays. It should also easily handle changes over time such as increased workload and data generation, additional users, and changing scheduling needs.

- Integration and Compatibility – In today’s ever-demanding data generation and handling environment, your ideal software should have a robust, open, and extensible application programming interface (API) architecture. APIs are the defined interfaces through which devices and software communicate throughout the automated system. The API architecture will determine how the whole lab automation software integrates with existing software platforms as well as its compatibility with existing infrastructure such as laboratory information management systems (LIMS), databases, project management tools, and more.

- User Experience and Training – Most software providers will assert that their platforms are intuitive, easy to navigate, and require minimal training. To tell platforms apart in this sea of sameness, it’s invaluable to hear feedback from neutral third-party software users. Inquire about how they are using the software, how they would rate their experience, and how they customized the software to satisfy their unique needs. Also, ask the provider and their users about training programs and post-installation support.

- Cost and Return on Investment – Consider not only the initial software costs, but also ongoing fees for application or feature enhancements, licenses, annual maintenance, customizations, and other expenses. Then weigh these against the budget-impacting benefits, such as streamlined workflows, increased system uptime, reduced need for reworked or repeated assays, improved resource utilization, and expanded productivity. Is the software worth the investment?

And of course, a hands-on demonstration is extremely useful to truly gauge the software’s utility and ease of operation in the context of your lab’s needs.

Parallel Processing is Key

Parallel processing is a critical capability: it improves the efficiency of sample processing and resource utilization, speeds data generation, and reduces time to discovery. In turn, you can identify targets faster, present findings earlier than your competition, and achieve new heights of success.

To learn more about parallel processing, read our blog, “Parallel Processing: The Key to Productivity and Fast Results”.

And as you enter advanced discussions with an automated system scheduling and management software provider, consider asking these final questions:

- Can the software handle parallel processing of multiple, different workflows? What if the workflows have multiple threads?

- Can this be done natively in the software, or do you need to run multiple instances of the software?

- Can a new experiment be started at any time while the system is running another process, or must users wait for long gaps, such as incubations, in the system’s utilization?

- How does the system track active samples across multiple workflows, and can you guarantee that plates won’t get mixed up?

- How is error recovery handled when there are multiple workflows and plates on the system at one time?
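To picture what the sample-tracking question is probing, consider this minimal sketch of a registry that ties each plate barcode to one workflow and one position, and refuses moves that would mix plates up. The `PlateRegistry` class, barcodes, and position names are hypothetical; a production system would also need persistence, barcode verification at each device, and recovery logic.

```python
class PlateRegistry:
    """Tracks which workflow owns each plate and where the plate sits."""

    def __init__(self):
        self._owner = {}     # barcode -> workflow name
        self._location = {}  # barcode -> position name

    def register(self, barcode, workflow, position):
        if barcode in self._owner:
            raise ValueError(f"{barcode} is already registered to {self._owner[barcode]}")
        self._check_free(position)
        self._owner[barcode] = workflow
        self._location[barcode] = position

    def move(self, barcode, workflow, position):
        owner = self._owner.get(barcode)
        if owner != workflow:
            # A workflow may only move plates it owns.
            raise ValueError(f"{barcode} belongs to {owner}, not {workflow}")
        self._check_free(position)
        self._location[barcode] = position

    def where(self, barcode):
        return self._location[barcode]

    def _check_free(self, position):
        # One plate per position at a time.
        if position in self._location.values():
            raise ValueError(f"{position} is occupied")


# Hypothetical plates and positions for illustration.
registry = PlateRegistry()
registry.register("PLATE-0001", "elisa", "stacker-1")
registry.register("PLATE-0002", "pcr_prep", "stacker-2")
registry.move("PLATE-0001", "elisa", "reader-1")
print(registry.where("PLATE-0001"))
```

Here a second workflow cannot move a plate it does not own, and no two plates can occupy the same position at once.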

As always, we welcome you to connect with us to learn more about Cellario whole lab automation software. We’re happy to answer the aforementioned questions and many more, and we look forward to providing you with a personalized demonstration.

Revision: BL-DIG-230714-01_RevA